If you’re new to marimo, check out our GitHub repo: marimo is free and open source.

Since version 0.9.0, marimo has supported building AI chatbots that address two critical challenges in modern AI applications:

- Limited or imprecise model knowledge, addressed through custom model support and tool calling

- Responses limited to plain text and markdown, addressed through rich, interactive outputs

In this blog post, we’ll dive into these capabilities and show their flexibility through practical examples.

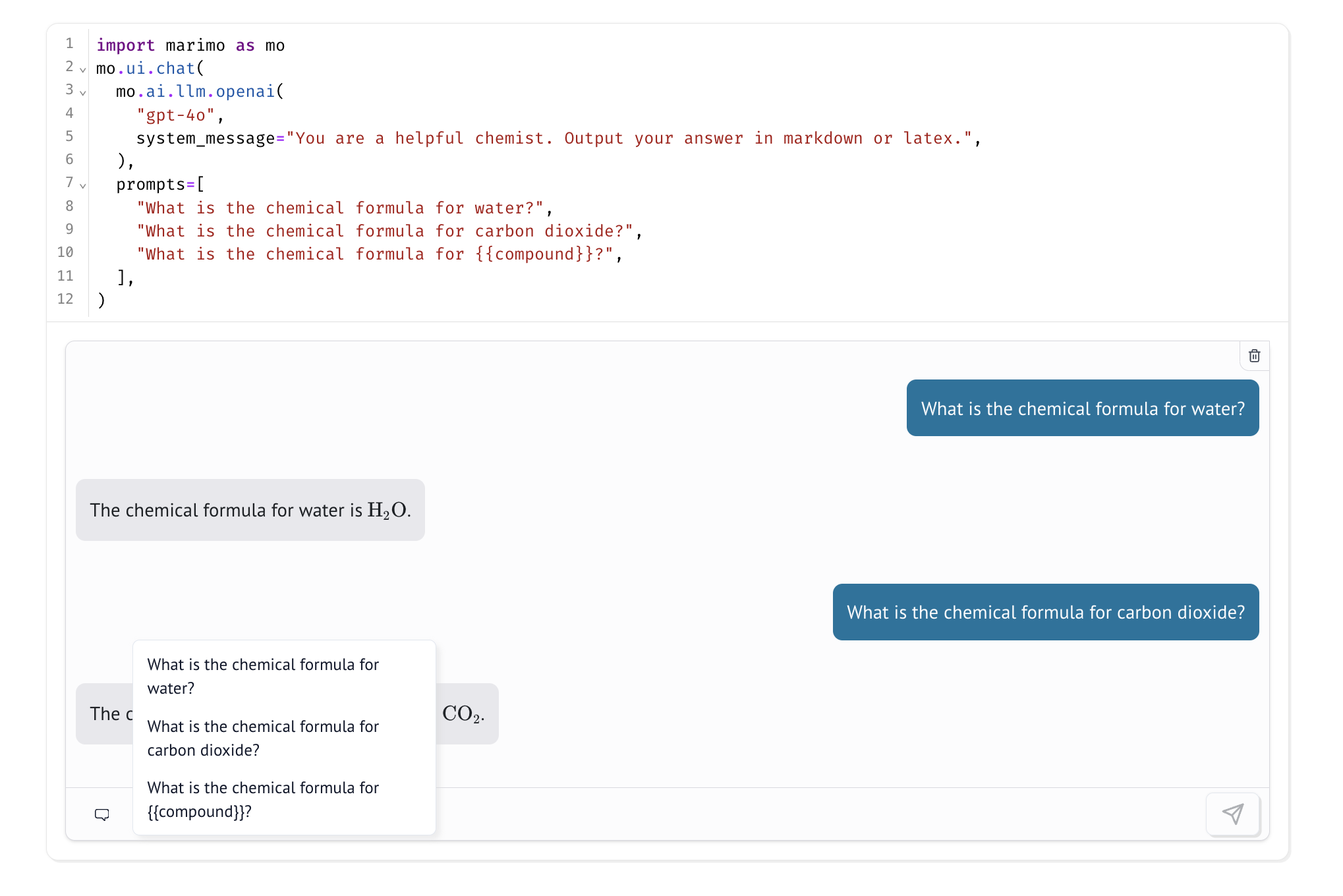

Just the basics: Creating a simple chatbot

Let’s start by creating a simple chatbot with mo.ui.chat and one of marimo’s built-in model integrations, mo.ai.llm.openai.

```python
import marimo as mo

mo.ui.chat(
    mo.ai.llm.openai(
        "gpt-4o",
        system_message="You are a helpful chemist. Output your answer in markdown or latex.",
    ),
    # Provide some sample prompts
    prompts=[
        "What is the chemical formula for water?",
        "What is the chemical formula for carbon dioxide?",
        "What is the chemical formula for {{compound}}?",
    ],
    # Show some configuration controls to change temperature, token limit, etc.
    show_configuration_controls=True,
)
```

This code snippet creates a basic chatbot interface that allows users to input messages and receive responses from the LLM. We’ve included a few sample prompts to give users a starting point. Any prompt that includes a {{placeholder}} will create a form that allows users to fill in the placeholder with their own input.
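The {{placeholder}} form is handled by mo.ui.chat itself. As a rough mental model only (this is not marimo’s implementation), filling such a template amounts to finding the {{name}} markers and substituting the values the user types into the form. The helpers find_placeholders and fill_prompt below are hypothetical names used for illustration.

```python
import re

# Matches {{name}} markers; the name may contain letters, digits, and underscores.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_placeholders(template: str) -> list[str]:
    """Return placeholder names in order of first appearance, without duplicates."""
    seen: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Replace each {{name}} with values[name]; raise KeyError if a value is missing."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"missing value for placeholder {name!r}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


template = "What is the chemical formula for {{compound}}?"
print(find_placeholders(template))  # ['compound']
print(fill_prompt(template, {"compound": "methane"}))
# What is the chemical formula for methane?
```

Each name returned by a function like find_placeholders would correspond to one input field in the form.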

Custom models

One of the most powerful features of marimo’s chatbot framework is its ability to work with custom models. This flexibility allows you to implement custom prompting strategies, tool calling, and more.

Here’s an example of how you might create a custom model:

```python
import marimo as mo

# find_relevant_docs and query_llm are placeholders you implement yourself.
def my_model(messages, config):
    question = messages[-1].content
    # Search for relevant docs in a vector database, blob storage, etc.
    docs = find_relevant_docs(question)
    context = "\n".join(docs)
    prompt = f"Context: {context}\n\nQuestion: {question}\n\nAnswer:"
    # Query your own model or third-party models
    response = query_llm(prompt, config)
    return response

mo.ui.chat(my_model)
```

In this example, both find_relevant_docs and query_llm are placeholders for functions that you would implement to search your database and query your model. This approach allows for sophisticated information retrieval techniques like RAG (Retrieval-Augmented Generation) or calling out to specialized models.
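To try the custom-model pattern without a vector database or API key, the two placeholders can be swapped for toy stand-ins. Everything here is hypothetical: DOCS is made-up in-memory data, find_relevant_docs ranks documents by naive word overlap instead of embeddings, and query_llm simply echoes the prompt where a real model call would go. The Message dataclass only imitates the .content attribute of the messages mo.ui.chat passes in.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """Stand-in for the chat message objects that mo.ui.chat passes to a model."""
    role: str
    content: str


# Hypothetical in-memory "knowledge base" used instead of a vector database.
DOCS = [
    "marimo notebooks are stored as pure Python files.",
    "mo.ui.chat renders a chat interface in a marimo notebook.",
    "Polars is a DataFrame library written in Rust.",
]


def find_relevant_docs(question: str, k: int = 2) -> list[str]:
    """Rank DOCS by how many lowercase whitespace-separated words they share with the question."""
    words = set(question.lower().split())
    scored = [(len(words & set(doc.lower().split())), doc) for doc in DOCS]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only the top-k documents that share at least one word.
    return [doc for score, doc in scored[:k] if score > 0]


def query_llm(prompt: str, config=None) -> str:
    """Placeholder for a real model call; here it just echoes the prompt."""
    return prompt


def my_model(messages, config=None):
    question = messages[-1].content
    context = "\n".join(find_relevant_docs(question))
    prompt = f"Context: {context}\n\nQuestion: {question}\n\nAnswer:"
    return query_llm(prompt, config)


print(my_model([Message("user", "What does mo.ui.chat do?")]))
```

In a notebook, this function would be passed to mo.ui.chat(my_model) unchanged; upgrading to real retrieval and a real LLM only touches the two helpers.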

Embracing multi-modal inputs

mo.ui.chat goes beyond text-based interactions by supporting multi-modal inputs, such as images attached to a message. Let’s look at an example that allows users to upload an image and ask questions about it:

```python
import marimo as mo

chat = mo.ui.chat(
    mo.ai.llm.openai(
        "gpt-4o",
        system_message="""You are a helpful assistant that can
parse my recipes and summarize them for me.
Give me a title in the first line.""",
    ),
    allow_attachments=["image/png", "image/jpeg"],
)
chat
```

```python
# In a separate cell: it reruns whenever the chat's value changes.
# Stop until the assistant has replied at least once.
mo.stop(not chat.value or chat.value[-1].role != "assistant")

last_message: str = chat.value[-1].content
lines = last_message.split("\n")
title = lines[0].strip()
# split() returns a list, so join the remaining lines back into one string
summary = "\n".join(lines[1:])

with open(f"{title}.md", "w") as f:
    f.write(summary)
```

This code creates a chatbot that can process uploaded images of recipes. The LLM analyzes the image, extracts the recipe information, and provides a summary. The summary is then saved as a markdown file, demonstrating how you can use the chat responses for downstream tasks like report generation or database updates.
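Saving the reply is easy to get subtly wrong: the model may wrap its title in markdown such as # or **, and a title can contain characters that are not valid in file names. A more defensive helper might look like the sketch below; save_recipe_summary and its file-naming rules are hypothetical choices, not part of marimo.

```python
import re
import tempfile
from pathlib import Path


def save_recipe_summary(reply: str, out_dir: str = ".") -> Path:
    """Write everything after the first line of reply to a markdown file named after that line."""
    lines = reply.strip().split("\n")
    # The first line is the title; drop markdown heading (#) or bold (**) markers around it.
    title = lines[0].strip().lstrip("#").strip().strip("*").strip()
    summary = "\n".join(lines[1:]).strip()
    # Make a safe file name: runs of characters other than letters, digits,
    # "_" and "-" become a single "-"; fall back to "recipe" if nothing is left.
    slug = re.sub(r"[^\w-]+", "-", title).strip("-").lower() or "recipe"
    path = Path(out_dir) / f"{slug}.md"
    path.write_text(summary + "\n", encoding="utf-8")
    return path


# Example with a made-up reply, written to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    saved = save_recipe_summary("# Banana Bread\nMash the bananas.\nBake for an hour.", tmp)
    print(saved.name)  # banana-bread.md
    print(saved.read_text(encoding="utf-8"))
```

In the notebook, the guarded cell would call save_recipe_summary(chat.value[-1].content) instead of opening the file by hand.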

Generative UI and tool calling

marimo’s chat interface supports Generative UI: the ability to stream rich, interactive UI components directly from LLM responses. This goes beyond traditional text and markdown outputs, allowing chatbots to return dynamic elements like tables, charts, and interactive visualizations.

First, let’s load some data.

```python
# Grab a dataset
import polars as pl

df = pl.read_csv("hf://datasets/scikit-learn/Fish/Fish.csv")
```

Now, we’ll create two tools for our chatbot:

- chart_data: Generates an Altair chart based on given encodings.

- filter_dataset: Filters a Polars dataframe using SQL.

To create these tools, we’ll use ell, a library for prompting and building tools for language models.

```python
import ell
import marimo as mo

@ell.tool()
def chart_data(x_encoding: str, y_encoding: str, color: str):
    """Generate an altair chart"""
    import altair as alt

    return (
        alt.Chart(df)
        .mark_circle()
        .encode(x=x_encoding, y=y_encoding, color=color)
        .properties(width=500)
    )

@ell.tool()
def filter_dataset(sql_query: str):
    """
    Filter a polars dataframe using SQL. Please only use fields from the schema.
    When referring to the table in SQL, call it 'data'.
    """
    filtered = df.sql(sql_query, table_name="data")
    return mo.ui.table(
        filtered,
        label=f"```sql\n{sql_query}\n```",
        selection=None,
    )
```

Next, we’ll create a custom model wrapper that uses our tools:

```python
@ell.complex(model="gpt-4o", tools=[chart_data, filter_dataset])
def analyze_dataset(prompt: str) -> str:
    """You are a data scientist that can analyze a dataset"""
    return f"I have a dataset with schema: {df.schema}. \n{prompt}"

def my_model(messages, config):
    # Pass the latest user message to the LLM (config is unused here)
    response = analyze_dataset(messages[-1].content)
    if response.tool_calls:
        # Run the first tool the LLM chose and return its result (a chart or table)
        return response.tool_calls[0]()
    return response.text

mo.ui.chat(
    my_model,
    prompts=[
        "Can you chart two columns of your choosing?",
        "Can you find the min, max of all numeric fields?",
        "What is the sum of {{column}}?",
    ],
)
```

This advanced example demonstrates how to create a chatbot that can perform data analysis tasks. It can generate charts, filter data, and compute aggregates (through SQL queries) based on user prompts. Tool calling lets the chatbot execute specific Python functions and return their rich, interactive outputs, such as an Altair chart or a marimo table, directly in the chat.
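ell takes care of tool schemas and dispatch, but the underlying mechanism is simple enough to sketch without any library. In the sketch below, the model is faked: fake_model is a hypothetical stand-in that returns a tool name plus JSON-encoded arguments (roughly the shape tool-calling APIs use), TOOLS is a registry mapping names to plain Python functions, and ROWS is made-up data. Because each tool’s return value is handed back as-is rather than converted to text, a tool could just as well return a chart or table object.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolCall:
    name: str
    arguments: str  # JSON-encoded keyword arguments chosen by the model


@dataclass
class ModelResponse:
    text: str = ""
    tool_calls: list[ToolCall] = field(default_factory=list)


# Made-up rows standing in for the dataframe the real tools close over.
ROWS = [{"name": "a", "weight": 1.5}, {"name": "b", "weight": 2.5}]


def column_sum(column: str) -> float:
    """Toy tool: sum one numeric column of ROWS."""
    return sum(row[column] for row in ROWS)


# Registry mapping tool names (as the model sees them) to Python functions.
TOOLS: dict[str, Callable[..., Any]] = {"column_sum": column_sum}


def fake_model(prompt: str) -> ModelResponse:
    """Hypothetical stand-in for an LLM: requests a tool call when the prompt mentions a sum."""
    if "sum" in prompt.lower():
        args = json.dumps({"column": "weight"})
        return ModelResponse(tool_calls=[ToolCall("column_sum", args)])
    return ModelResponse(text="I can only compute sums in this sketch.")


def run_turn(prompt: str) -> Any:
    response = fake_model(prompt)
    if not response.tool_calls:
        return response.text
    # Execute every requested call and keep the raw return values.
    results = [TOOLS[call.name](**json.loads(call.arguments)) for call in response.tool_calls]
    return results[0] if len(results) == 1 else results


print(run_turn("What is the sum of weight?"))  # 4.0
print(run_turn("Hello"))  # I can only compute sums in this sketch.
```

One design difference worth noting: the my_model wrapper in the ell example runs only the first tool call, while run_turn executes every call the model requests.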

We’ve only scratched the surface of what you can do with marimo’s chatbot framework. To see more examples, check out the marimo examples repo.

Conclusion

marimo’s chatbot framework provides a powerful and flexible way to build AI-powered conversational interfaces. From simple text-based chatbots to complex, multi-modal interactions with Generative UI, the possibilities are vast. By leveraging custom models, tool calling, and marimo’s reactive runtime, you can create sophisticated chatbots that integrate seamlessly with your data and workflows, all with pure Python.

We’re excited to see what you’ll build with these tools. Whether you’re creating educational apps, data analysis assistants, or complex AI agents, marimo’s chatbot framework provides the foundation you need to bring your ideas to life.

Join the marimo community

If you're interested in helping shape marimo's future, here are some ways you can get involved: