Python notebooks with AI

Explore marimo's AI-powered features for Python development including TAB autocompletion, error auto-fixing, and integrated chat. Support for OpenAI, Anthropic, Google Gemini, and local models.

marimo is a modern Python notebook, which means ample integrations with AI tools. You have access to cutting-edge coding agents, which in turn have access to a rich development environment that can benefit from interactive widgets as well as database connections.

AI integration for Python notebooks

marimo offers a few ways to integrate AI into your workflow: you can configure TAB-style autocompletion, autofix errors in a cell with rich diffs, or use the chat interface in the sidebar.

- The TAB autocompletion goes beyond simple syntax suggestions. It understands your project context, imported libraries, and variable types to provide intelligent code completions that actually make sense for what you’re trying to accomplish. Instead of generic suggestions, you get contextually relevant code that fits naturally into your workflow.

- The error auto-fixing feature is particularly powerful. When your code encounters an error, marimo can automatically suggest fixes with visual diffs showing exactly what would change. This isn’t limited to syntax errors: the AI can also address logical issues and import problems, and can even suggest more efficient implementations.

- The integrated chat interface lets you ask questions about your code, request explanations, or get help with specific Python concepts without leaving your notebook. You can highlight code and ask for optimizations, request documentation, or even ask for help implementing new features.

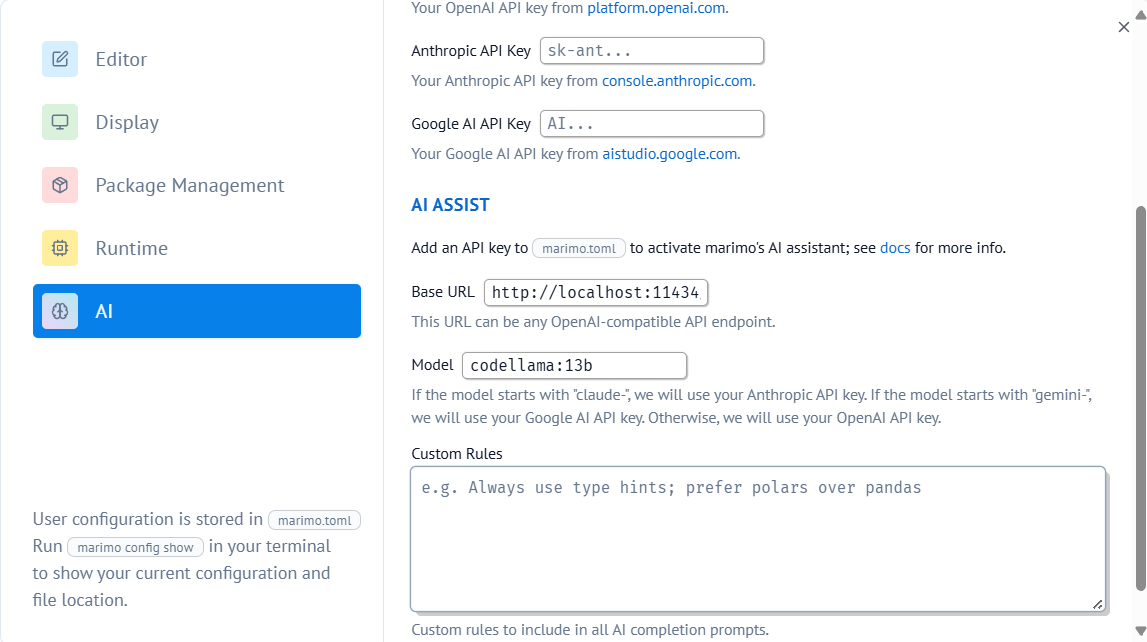

You can also add custom rules in your user settings, which makes the LLM aware of your preferences. For example, "prefer polars over pandas" is a common rule.

Support for many LLMs

marimo has direct support for models from OpenAI, Anthropic, Google Gemini and Ollama. It also supports any provider that exposes an OpenAI-compatible API, so you’re free to experiment with any provider that you like, including those that run locally on your machine.

The OpenAI API compatibility also opens up access to newer providers and open-source models as they become available. You’re not locked into any single vendor’s ecosystem, giving you the freedom to adapt as the AI landscape evolves.
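To make "OpenAI API compatible" concrete, here is a minimal sketch of what such a request looks like at the HTTP level. It is not marimo's own code. The helper name `build_chat_request`, the placeholder key, and both model names are hypothetical, and the local URL assumes an Ollama server exposing its OpenAI-compatible endpoint on the default port. The request is only built, never sent.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request.

    Hypothetical helper for illustration; not part of marimo.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Same request shape for two providers: only base URL, key, and model change.
# The key and model names below are placeholders, not real values.
hosted = build_chat_request(
    "https://api.openai.com/v1", "sk-placeholder", "example-hosted-model", "Explain this traceback."
)
# Assumption: a local Ollama server listening on its default port.
local = build_chat_request(
    "http://localhost:11434/v1", "unused", "example-local-model", "Explain this traceback."
)

print(hosted.full_url)
print(local.full_url)
```

Because the request shape is identical, switching providers usually comes down to changing the base URL, API key, and model name.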

AI that is aware of your code

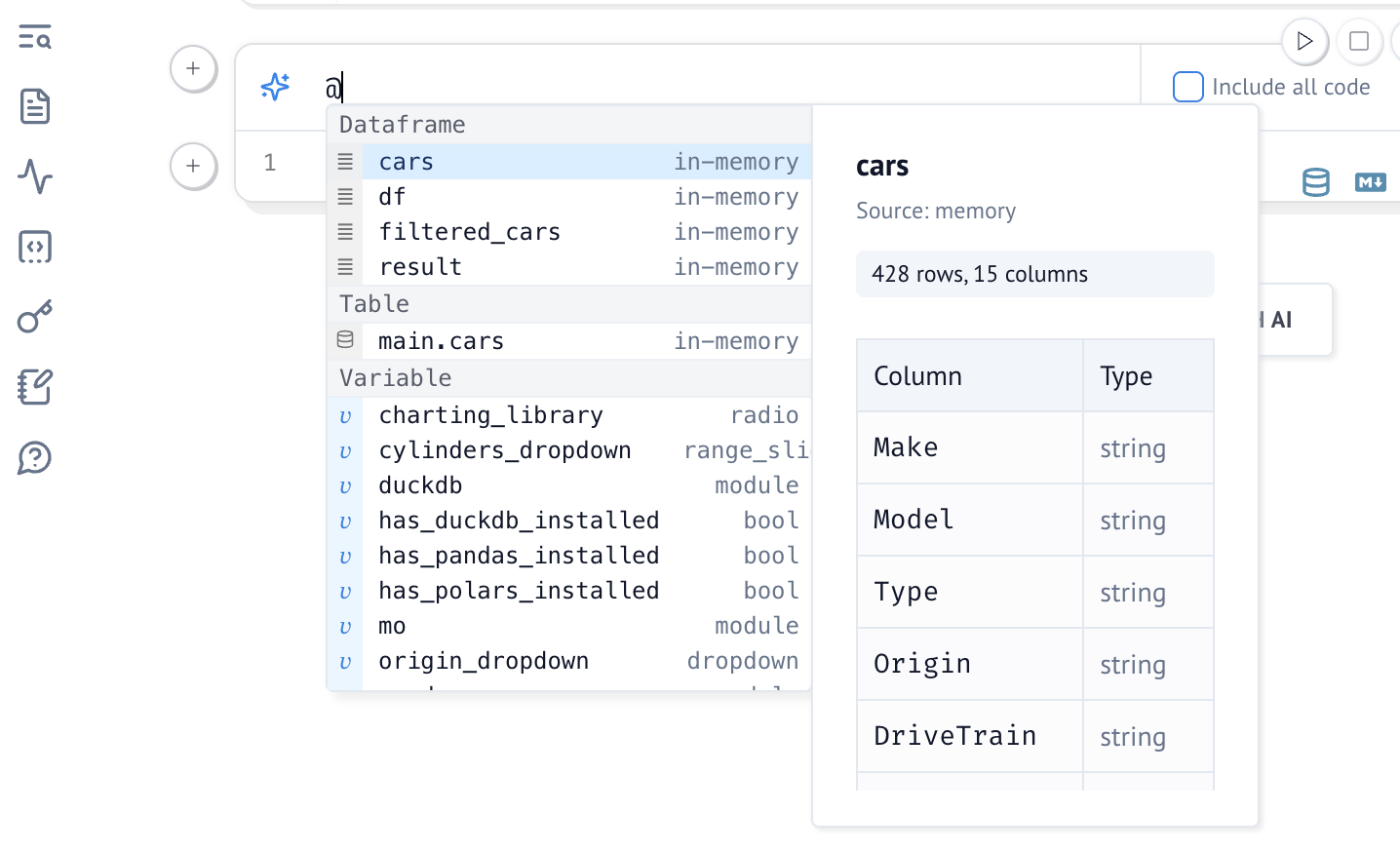

You can @ any variable that marimo has access to in order to add context. This includes dataframes as well as any tables in a connected database. This really helps with writing SQL as well as debugging.

As you can see, this also brings up some much-appreciated autocompletion.
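To see why table context helps with SQL, consider this rough sketch of how a table's schema could be turned into prompt context. It is an illustration only and does not show how marimo implements @-mentions. The helpers `describe_table` and `build_prompt`, the `orders` table, and the prompt layout are all hypothetical, and an in-memory SQLite database stands in for a connected database.

```python
import sqlite3


def describe_table(conn: sqlite3.Connection, table: str) -> str:
    """Return a one-line schema summary, e.g. 'orders(id INTEGER, total REAL)'."""
    # PRAGMA table_info yields rows of (cid, name, type, notnull, dflt_value, pk).
    rows = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    if not rows:
        raise ValueError(f"unknown table: {table}")
    columns = ", ".join(f"{name} {col_type}" for _, name, col_type, *_ in rows)
    return f"{table}({columns})"


def build_prompt(question: str, conn: sqlite3.Connection, mentioned_tables: list[str]) -> str:
    """Prepend the schema of each mentioned table to the user's question."""
    context = "\n".join(describe_table(conn, t) for t in mentioned_tables)
    return f"Schema:\n{context}\n\nQuestion: {question}"


# Hypothetical example data: an in-memory database with a single table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer TEXT, total REAL)")

prompt = build_prompt("Total revenue per customer?", conn, ["orders"])
print(prompt)
```

With column names and types in the prompt, a model can refer to `customer` and `total` directly instead of guessing at the schema.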

Want to give marimo a spin?

uv pip install marimo